Investigating Adaptive Hand Visibilities for Accurate 3D User Interactions in Augmented Reality

Rumeysa Turkmen, Nour Hatira, Robert J Teather, Marta Kersten-Oertel, Wolfgang Stuerzlinger, Anil Ufuk Batmaz

Abstract:

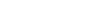

Augmented Reality (AR) tasks that require fine motor control often rely on visual hand representations, yet fully visible hands can introduce occlusion and reduce accuracy. In this work, we investigate adaptive hand visualization techniques that dynamically adjust hand visibility based on the current interaction phase to improve accuracy in AR. We designed an AR pedicle screw placement task in which users insert virtual screws into a physical spine model. To evaluate how hand visualization affects task performance, we conducted a user study with 15 participants who performed the task under five hand visualization conditions: opaque, transparent, invisible, speed-based, and position-based visibility. Our results show that the adaptive visualization techniques significantly improve screw placement accuracy compared to the commonly used opaque hand representation. These findings demonstrate that dynamically adapting hand visibility can support accuracy-critical interactions.
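The abstract names speed-based and position-based visibility but does not define how either maps hand state to opacity. The sketch below is purely illustrative and is not the authors' implementation. It assumes that hand opacity (alpha) ramps linearly between two thresholds. In this sketch, the hand fades out as it slows down or approaches the target, on the assumption that these states correspond to the fine-placement phase. All names (`VisibilityParams`, `speed_based_alpha`, `position_based_alpha`) and all threshold values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VisibilityParams:
    # Hypothetical thresholds: the abstract does not report the values used in the study.
    slow_speed: float = 0.05  # m/s; at or below this, assume fine-placement phase
    fast_speed: float = 0.30  # m/s; at or above this, assume coarse-movement phase
    near_dist: float = 0.03   # m; within this distance of the target, hand is "near"
    far_dist: float = 0.15    # m; beyond this distance, hand is "far"
    min_alpha: float = 0.0    # fully invisible hand
    max_alpha: float = 1.0    # fully opaque hand


def _ramp(x: float, lo: float, hi: float) -> float:
    """Linear ramp: 0 at or below lo, 1 at or above hi, linear in between."""
    if x <= lo:
        return 0.0
    if x >= hi:
        return 1.0
    return (x - lo) / (hi - lo)


def speed_based_alpha(speed: float, p: VisibilityParams) -> float:
    """Hypothetical mapping: fast movement -> opaque hand, slow movement -> hand fades out."""
    t = _ramp(speed, p.slow_speed, p.fast_speed)
    return p.min_alpha + t * (p.max_alpha - p.min_alpha)


def position_based_alpha(distance_to_target: float, p: VisibilityParams) -> float:
    """Hypothetical mapping: far from target -> opaque hand, near target -> hand fades out."""
    t = _ramp(distance_to_target, p.near_dist, p.far_dist)
    return p.min_alpha + t * (p.max_alpha - p.min_alpha)


if __name__ == "__main__":
    params = VisibilityParams()
    for speed in (0.0, 0.175, 0.5):
        print(f"speed={speed:.3f} m/s -> alpha={speed_based_alpha(speed, params):.2f}")
    for dist in (0.01, 0.09, 0.2):
        print(f"distance={dist:.2f} m -> alpha={position_based_alpha(dist, params):.2f}")
```

A linear ramp is only one possible choice. A step function or a smoothed curve would implement the same idea of phase-dependent visibility, and a renderer would apply the returned alpha to the hand material each frame.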