Predicting Ray Pointer Landing Poses in VR Using Multimodal LSTM-Based Neural Networks Inproceedings

Wenxuan Xu, Yushi Wei, Xuning Hu, Wolfgang Stuerzlinger, Yuntao Wang, Hai-Ning Liang

Abstract:

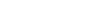

Target selection is one of the most fundamental tasks in VR interaction systems, and prediction heuristics can give users a smoother interaction experience during selection. Our work aims to predict the ray landing pose for hand-based raycasting selection in Virtual Reality (VR) using a Long Short-Term Memory (LSTM)-based neural network. The network takes time-series input of speed and distance from three pose channels: hand, Head-Mounted Display (HMD), and eye. We first conducted a study to collect motion data from these three input channels and analyzed the resulting movement behaviors. We also evaluated which combination of input modalities yields the best result. A second study validated raycasting across a continuous range of distances, angles, and target sizes. On average, our technique's predictions were within 4.6° of the true landing pose at 50% of the way through the movement. We compared our LSTM neural network model to a kinematic information model and further validated its generalizability in two ways: by training the model on one user's data and testing on other users (cross-user), and by training on a group of users and testing on entirely new users (unseen users). Compared to the baseline and a previous kinematic method, our model increased prediction accuracy by factors of 3.5 and 1.9, respectively, at 40% of the way through the movement.
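To make the quantities in the abstract concrete, the following is a minimal Python sketch of how a per-channel speed and distance time series, and an angular prediction error in degrees, could be computed from ray directions. It is an illustration only, not the authors' implementation: the abstract does not specify how speed and distance are defined, so treating both as angular quantities is an assumption, and the function names `angular_error_deg` and `speed_distance_features` are hypothetical.

```python
import math


def angular_error_deg(pred, true):
    """Angle in degrees between two non-zero 3D direction vectors."""
    dot = sum(p * t for p, t in zip(pred, true))
    norm = math.sqrt(sum(p * p for p in pred)) * math.sqrt(sum(t * t for t in true))
    if norm == 0:
        raise ValueError("direction vectors must be non-zero")
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


def speed_distance_features(directions, timestamps):
    """Per-frame (angular speed in deg/s, cumulative angular distance in deg).

    Assumption: one channel (hand, HMD, or eye) is given as a sequence of
    direction vectors with matching, strictly increasing timestamps in seconds.
    """
    features = []
    travelled = 0.0
    for i in range(1, len(directions)):
        dt = timestamps[i] - timestamps[i - 1]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        step = angular_error_deg(directions[i - 1], directions[i])
        travelled += step
        features.append((step / dt, travelled))
    return features
```

Under these assumptions, the feature sequences of the three channels could be concatenated frame by frame to form the multimodal input to a sequence model, and `angular_error_deg` applied to the predicted and true landing directions would give an error comparable in kind to the 4.6° figure reported above.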