User-defined Surface+Motion Gestures for 3D Manipulation of Objects at a Distance Through a Mobile Device Inproceedings

Hai-Ning Liang, Cary Williams, Myron Semegen, Wolfgang Stuerzlinger, Pourang Irani

Abstract:

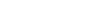

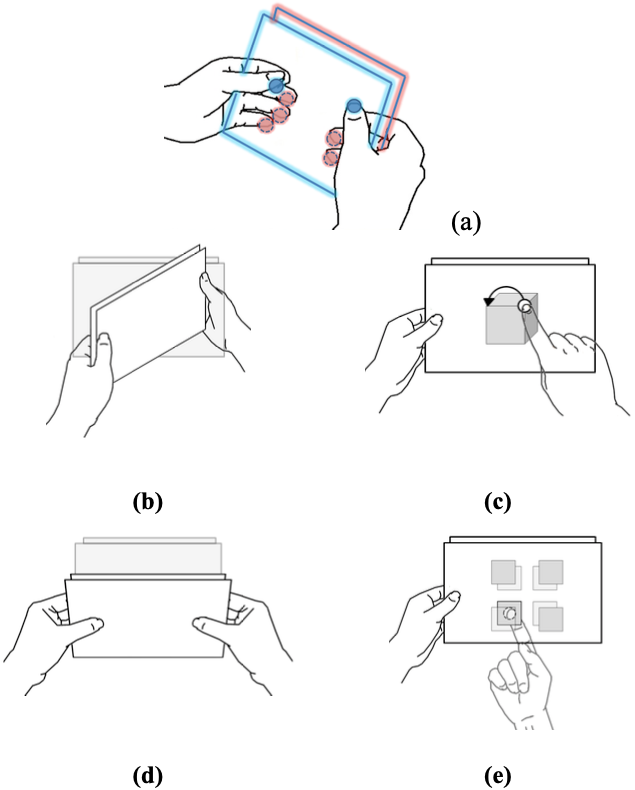

One way to interact with large shared surfaces is through mobile devices. These personal devices provide interactive displays as well as numerous sensors that can be used to perform input gestures. We examine the possibility of using surface and motion gestures on mobile devices to interact with 3D objects on large surfaces. If such devices can be used effectively with large displays, then users can collaborate and carry out complex 3D manipulation tasks, which are not trivial to perform. To generate design guidelines for this type of interaction, we conducted a guessability study with a dual-surface concept device, which gives users access to information through both its front and its back. We elicited a set of end-user surface- and motion-based gestures. Our results demonstrate reasonably good agreement between gestures for the choice of sensor-based (i.e., tilt), multi-touch, and dual-surface input. In this paper we report the results of the guessability study and the design of the gesture-based interface for 3D manipulation.
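Guessability studies commonly quantify "agreement" with the agreement score of Wobbrock et al. For each referent (target action), identical gesture proposals are grouped, and the score is the sum of each group's squared share of all proposals. The overall score is the mean over referents. The abstract does not state which metric was used, so the sketch below is an assumption and not the authors' analysis. The function names and gesture labels (`tilt_forward`, `pinch`, and so on) are hypothetical illustrations, not data from the study.

```python
from collections import Counter


def agreement_for_referent(proposals):
    """Agreement A_r for one referent (assumed Wobbrock-style score).

    Groups identical gesture proposals and sums (|P_i| / |P_r|)^2 over the
    groups, where |P_r| is the total number of proposals for the referent.
    """
    total = len(proposals)
    if total == 0:
        raise ValueError("referent has no proposals")
    counts = Counter(proposals)
    return sum((n / total) ** 2 for n in counts.values())


def overall_agreement(proposals_by_referent):
    """Mean of the per-referent agreement scores over all referents."""
    if not proposals_by_referent:
        raise ValueError("no referents given")
    scores = [agreement_for_referent(p) for p in proposals_by_referent.values()]
    return sum(scores) / len(scores)


# Hypothetical elicitation data: 10 participants per referent.
# Labels are illustrative only and do not come from the paper.
proposals = {
    "rotate_about_x": ["tilt_forward"] * 6 + ["swipe_up_back"] * 3 + ["two_finger_drag"],
    "translate_along_z": ["pinch"] * 5 + ["tilt_forward"] * 5,
}

for referent, gestures in proposals.items():
    print(referent, round(agreement_for_referent(gestures), 2))
print("overall", round(overall_agreement(proposals), 2))
```

With these made-up numbers, `rotate_about_x` scores 0.36 + 0.09 + 0.01 = 0.46 and `translate_along_z` scores 0.25 + 0.25 = 0.5, for an overall mean of 0.48. A score of 1.0 would mean every participant proposed the same gesture for that referent.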